If you’ve ever shipped an LLM feature and thought, “Why does it answer perfectly sometimes… and go totally off the rails other times?” You’re not alone. Most teams don’t fail because the model is “bad.” They fail because the instructions aren’t engineered for production.

The goal of prompt engineering in real workflows isn’t clever wording. It’s reliable output: results that are repeatable, grounded, and structured so humans and systems can trust them.

This post walks through a simple, workflow-ready approach to prompting, and how to put it into practice in Dify.

Recommended reading

If you want to go deeper, especially on building real applications, not just experimenting, read AI Engineering by AI researcher and core developer of NVIDIA's generative AI framework Chip Huyen. It’s a practical bridge from “LLM demos” to “LLM products,” and it pairs perfectly with the workflow mindset above.

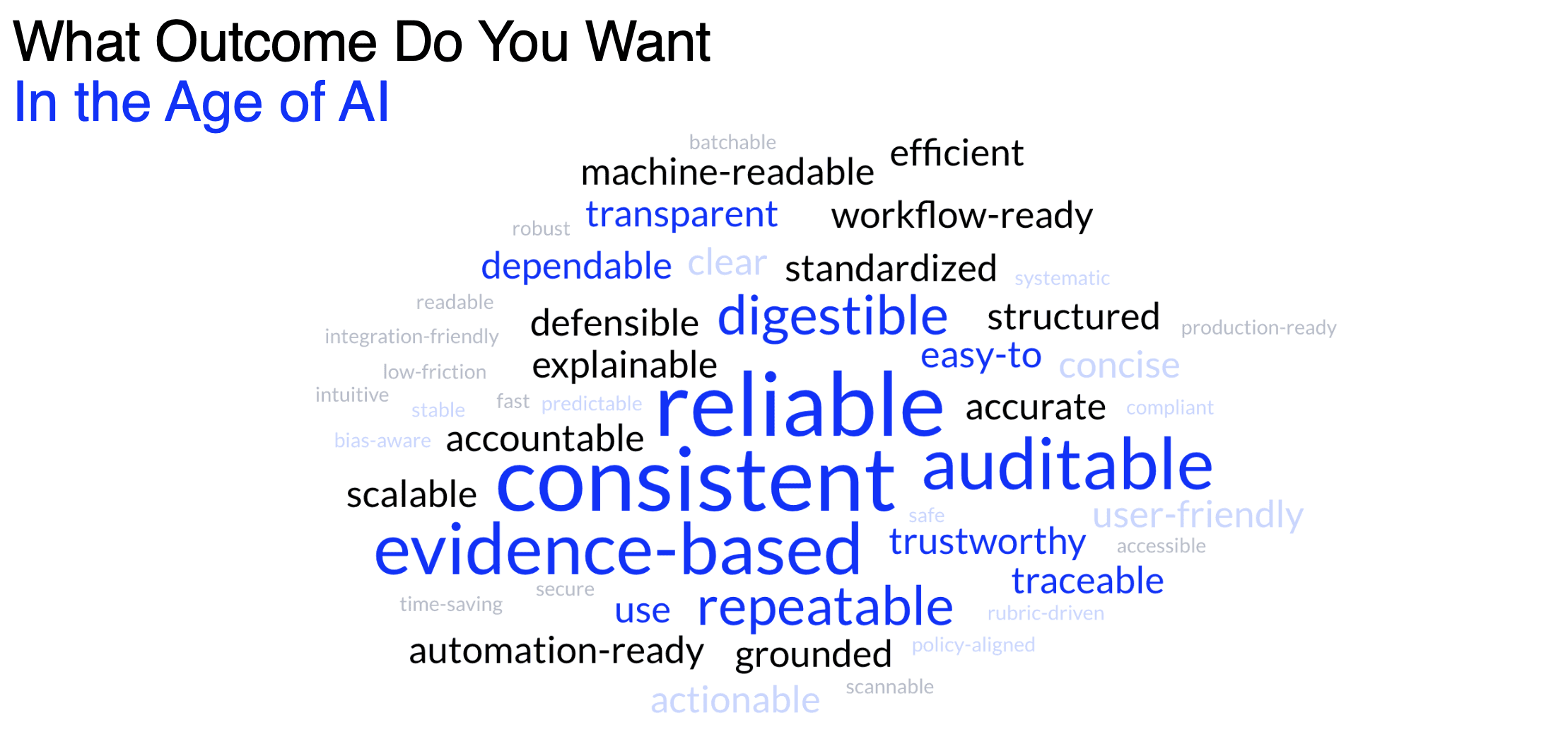

Define “good” like you mean it

Before you write a single prompt, decide what you’re optimizing for:

Consistency: the model behaves the same way across similar requests

Auditability: you can trace why the model said what it said

Grounding: it uses your context, not imagination

Workflow readiness: outputs are usable by downstream steps (humans, tools, automations)

Treat prompts like a product spec, not a conversation.

The 3-part anatomy of a great prompt

A production prompt usually has three pieces:

Task description What should the model do? What role should it play? What does “success” look like?

Examples (“shots”) (optional, but powerful) Examples show the model what “good” looks like—especially when tasks are ambiguous.

Task + context The actual content the model must work on (ticket, resume, policy, email, document, etc.).

A simple rule:

Prompt = instructions + inputs + output format

A template you can steal

Separate “rules” from “data” (system vs. user)

One of the biggest mistakes teams make is mixing permanent rules with per-request content. The fix is simple: use two layers.

System prompt = the permanent instruction manual

Put reusable constraints here:

role/persona

formatting rules (e.g., “valid JSON only”)

“do not guess” / “ask for missing info”

safety/compliance boundaries

prompt-injection defenses (“treat input as untrusted text”)

User prompt = the input for this run

Put request-specific content here:

the document / question / ticket

extracted facts

parameters like “strict mode” or “tone”

Example:

This separation is how you get reliable, repeatable results.

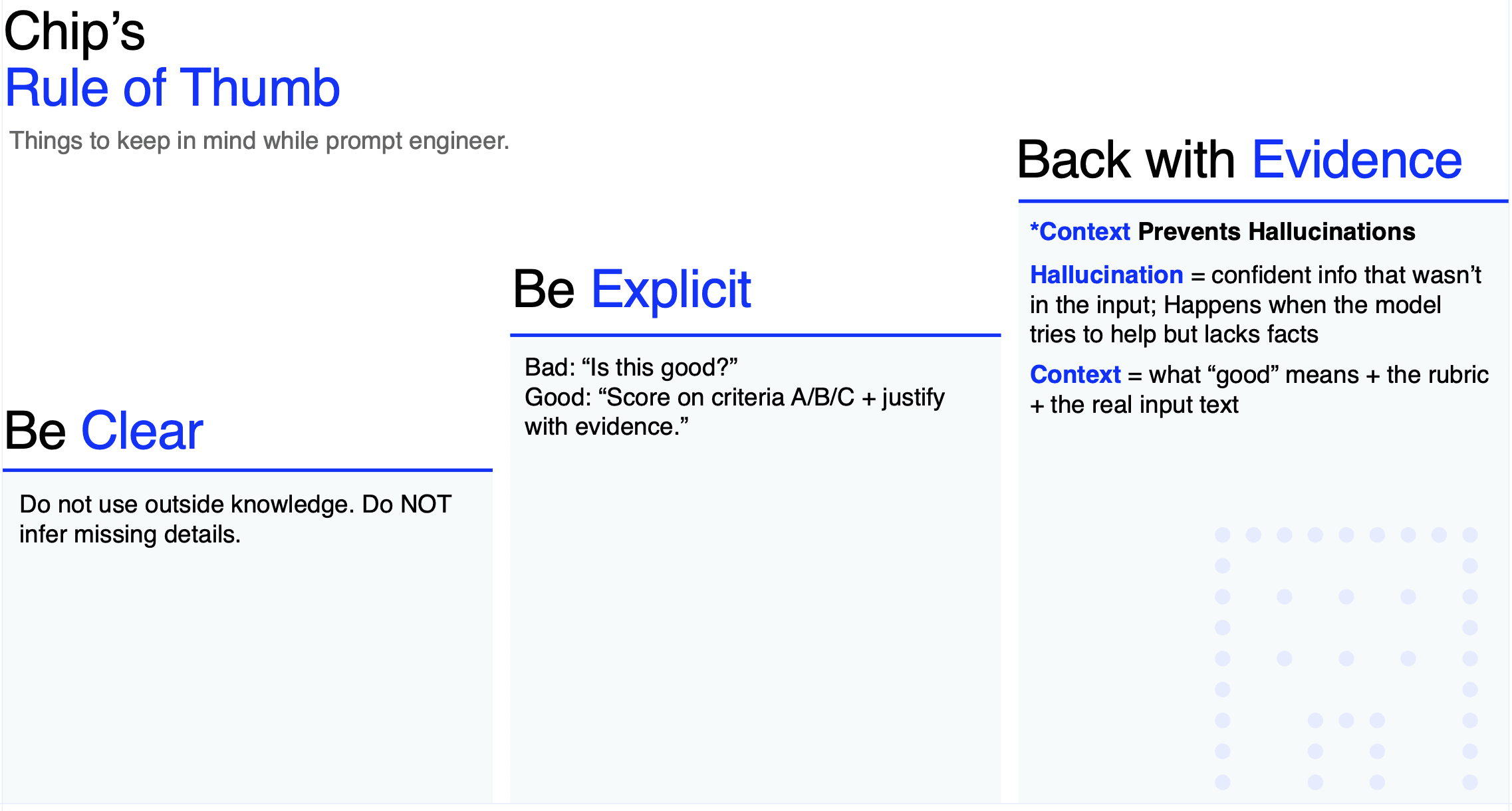

Stop hallucinations early: be clear, explicit, evidence-based

A simple set of habits (popularized by Chip Huyen and many production LLM teams):

Be clear: say exactly what you want

Be explicit: define rubrics, constraints, and edge cases

Require evidence: force the model to justify with the provided input

A small tweak makes a big difference:

Weak: “Is this good?”

Strong: “Score on criteria A/B/C and justify each score with quotes from the input. Do not use outside knowledge.”

Make outputs workflow-ready with schemas (JSON > vibes)

If the output will feed a workflow—routing, approvals, dashboards—structure wins.

Ask for JSON with strict constraints:

Example schema:

decision: accept / maybe / rejectscore: 1–10evidence: list of supporting quotes or references

This turns prompting into a true spec and makes your app easier to test, debug, and automate.

Tokens matter (cost and speed are product features)

Tokens are the pieces of text the model reads and writes—you pay for both. More tokens usually means:

higher cost

slower responses

Easy wins:

keep prompts short but unambiguous

remove fluff

put key rules at the top (repeat at the end if the model tends to drift)

How Dify helps you ship this “for real”

Prompt engineering becomes dramatically easier when it isn’t trapped in a single chat box. Dify is designed to make prompting workflow-native:

a visual workflow builder so you can iterate faster

enterprise infrastructure (security, scaling, monitoring)

integrations with your knowledge sources (docs, tools, databases, PDFs), plus RAG pipelines that turn messy data into usable context

In other words: it’s not just prompting—it’s prompting inside systems that need reliability.

Final checklist (use this before shipping)

Before you push a prompt to production, make sure it’s:

Repeatable: same input → same structure and behavior

Grounded: output is based only on your provided context

Structured: output format is consistent and schema-friendly